Georgia Tech Researchers Expose Hidden Cybersecurity Risk in Mobile Robots

January 23, 2026

Story by Tracie Troha | Photos by Mikey Fuller

As robots become essential for tasks ranging from warehouse logistics to disaster response, their reliance on networked communication makes them vulnerable to cyberattacks, particularly threats that leave no trace. A new study from Georgia Tech reveals how attackers can hijack mobile robots using simple mathematical tricks without triggering any alarms.

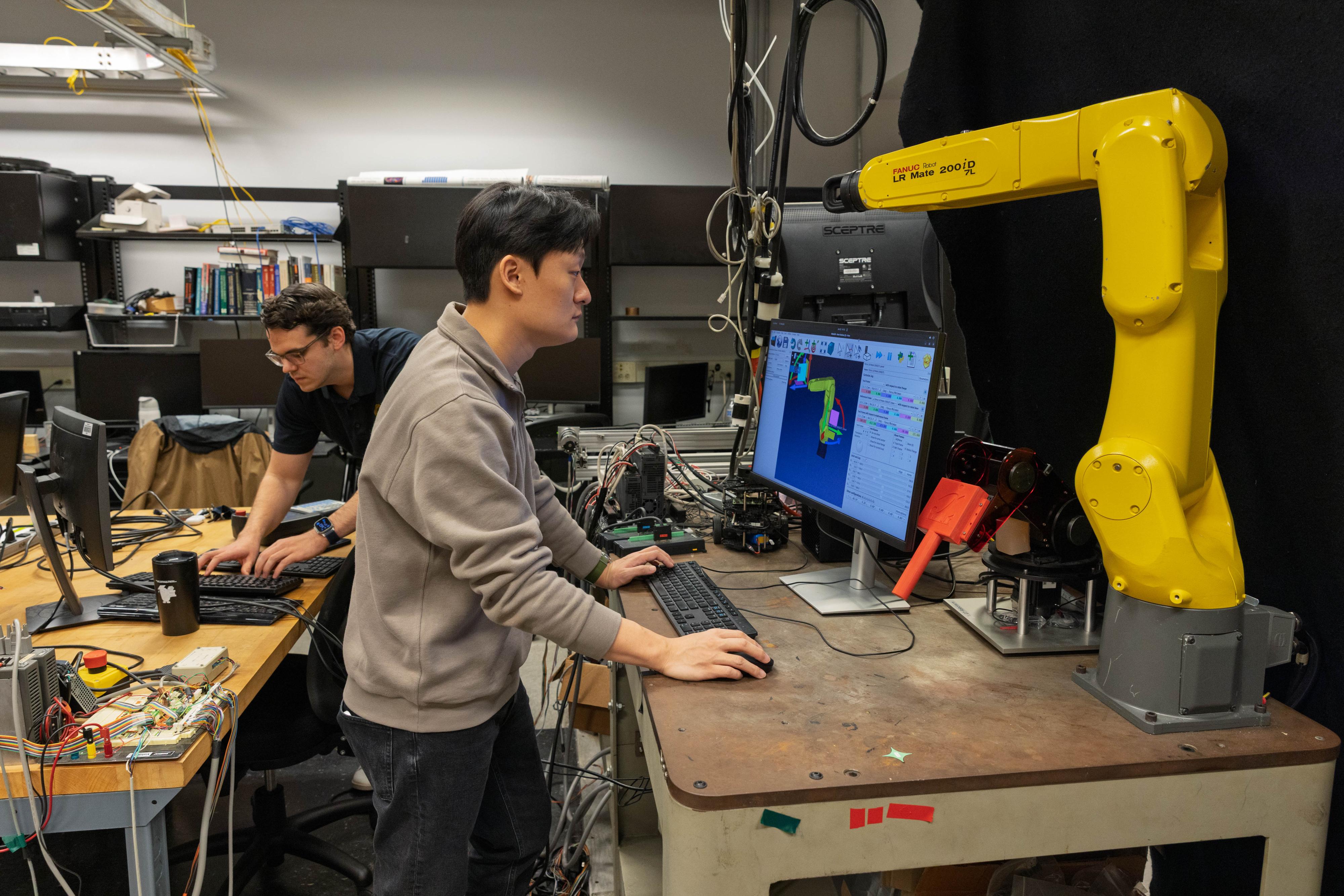

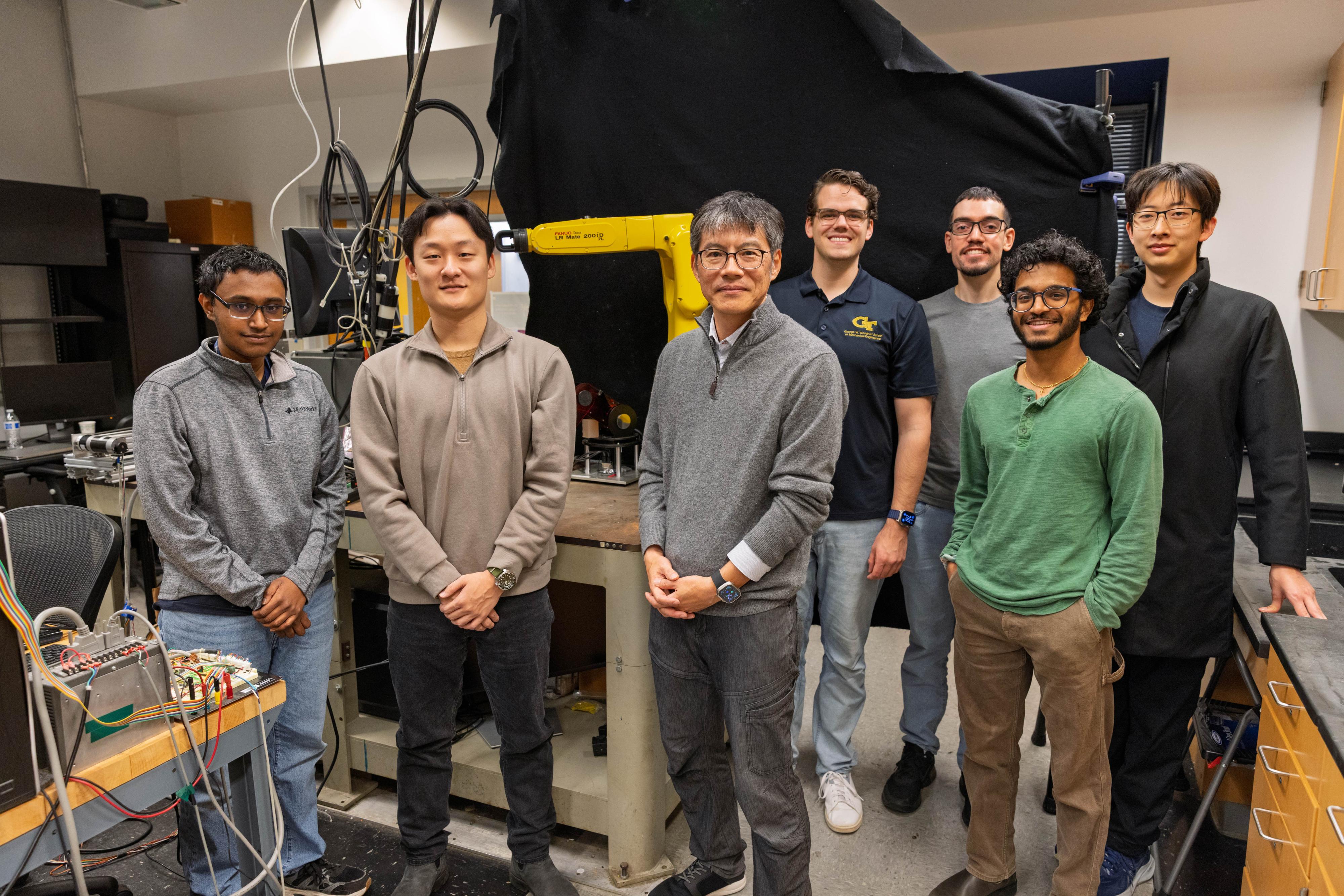

Published in IEEE Transactions on Robotics, a research paper by Jun Ueda, Harris Saunders, Jr. Professor in the George W. Woodruff School of Mechanical Engineering, and mechanical engineering Ph.D. student Hyukbin Kwon demonstrates that perfectly undetectable false data injection attacks (FDIAs) are not only possible but surprisingly easy to execute.

“Mobile robotic platforms are widely used in robotics and possess inherent dynamic symmetries that can act as vulnerabilities, allowing perfectly undetectable attacks in the worst case,” Ueda said.

When “Normal” Isn’t Normal

Modern robots constantly exchange data with remote controllers, reporting their position and receiving movement commands. This connectivity enables autonomy, but it also opens the door to manipulation. In an FDIA, attackers inject false data into these communication channels, misleading the robot or its controller.

Ueda and Kwon’s study focuses on perfectly undetectable attacks. Unlike typical hacks, these attacks leave no anomaly to detect: the robot behaves incorrectly while the controller is convinced that everything is fine.

Their research shows that, rather than relying on complex algorithms or deep system knowledge, attackers can exploit basic mathematical operations called affine transformations, such as scaling (multiplying values) or reflection (flipping directions). By applying matching transformations to both the commands sent to the robot and the position it reports back, attackers can alter its trajectory without raising suspicion.

For example, a scaling attack slows down or speeds up the robot, and a reflection attack mirrors its path, sending it into unintended areas.
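A minimal simulation sketch can make both attacks concrete. It rests on assumptions that are ours, not the paper's: a kinematic unicycle model stands in for the TurtleBot 3, a simple go-to-goal controller stands in for the trajectory-tracking controller, and the names `step`, `controller`, `ATTACKS`, and `simulate` are hypothetical. The attacker rewrites each command on its way to the robot and each position report on its way back to the controller.

```python
import math

# Illustrative assumptions (not from the paper): a kinematic unicycle model
# stands in for the TurtleBot 3, and a simple go-to-goal controller stands in
# for the trajectory-tracking controller.
DT = 0.01          # integration step (s)
GOAL = (2.0, 1.0)  # goal position in the controller's frame (m)


def step(state, v, omega, dt=DT):
    """One Euler step of x' = v cos(th), y' = v sin(th), th' = omega."""
    x, y, th = state
    return (x + dt * v * math.cos(th), y + dt * v * math.sin(th), th + dt * omega)


def controller(measured, goal=GOAL):
    """Go-to-goal controller; it can only see the (possibly forged) report."""
    x, y, th = measured
    dx, dy = goal[0] - x, goal[1] - y
    err = math.atan2(dy, dx) - th
    err = math.atan2(math.sin(err), math.cos(err))  # wrap heading error to [-pi, pi]
    v = min(0.5, math.hypot(dx, dy))                # slow down near the goal
    return v, 2.0 * err


# Each attack pairs a command rewrite (controller -> robot) with a report
# rewrite (robot -> controller).
ATTACKS = {
    "none": (lambda v, w: (v, w), lambda s: s),
    # Reflection about the x-axis: flip the turn rate, flip y and heading.
    "reflection": (lambda v, w: (v, -w), lambda s: (s[0], -s[1], -s[2])),
    # Scaling by k = 2: double the forward speed, halve the reported position.
    "scaling": (lambda v, w: (2.0 * v, w), lambda s: (s[0] / 2.0, s[1] / 2.0, s[2])),
}


def simulate(attack, steps=2000):
    """Return the robot's true final pose and the list of poses the controller saw."""
    rewrite_cmd, rewrite_report = ATTACKS[attack]
    true_state = (0.0, 0.0, 0.0)  # on the mirror line and at the scaling origin
    reported = []
    for _ in range(steps):
        measured = rewrite_report(true_state)  # forged position report
        reported.append(measured)
        v, w = controller(measured)            # controller reacts to the forgery
        true_state = step(true_state, *rewrite_cmd(v, w))  # forged command
    return true_state, reported


if __name__ == "__main__":
    baseline = simulate("none")[1]
    for name in ATTACKS:
        final, reported = simulate(name)
        gap = max(abs(a - b) for p, q in zip(reported, baseline) for a, b in zip(p, q))
        print(f"{name:>10}: true end ({final[0]:.2f}, {final[1]:.2f}), "
              f"max deviation of reported path from baseline {gap:.1e}")
```

In this toy setup the reported trajectory under either attack matches the unattacked run, while the real robot should finish near (2, -1) under reflection and near (4, 2) under scaling. The robot starts at the origin on purpose: that is the one pose the mirror and the scaling leave unchanged, so even the very first report looks normal.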

“Traditionally, kinematic and dynamic symmetries are incorporated into many robotic systems to ease design and control complexities. Ironically, such symmetries are vulnerabilities,” Ueda said.
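The symmetry Ueda describes can be checked by hand on the textbook kinematic unicycle model of a differential-drive robot. This model is a simplifying assumption for illustration; the paper itself works with the robot's dynamics and Jacobian. Here $(x, y, \theta)$ is the true pose, $(v, \omega)$ is the controller's command, and $z$ is the pose the attacker reports. In both attacks, $z$ obeys exactly the same equations as an unattacked robot driven by the original command, so the reported data look normal.

```latex
% Kinematic unicycle model (illustrative assumption)
\begin{aligned}
&\dot{x} = v\cos\theta, \qquad \dot{y} = v\sin\theta, \qquad \dot{\theta} = \omega \\[6pt]
% Reflection: robot receives (v, -\omega); controller sees z = (x, -y, -\theta)
&\dot{z}_1 = v\cos\theta = v\cos z_3, \quad
 \dot{z}_2 = -v\sin\theta = v\sin z_3, \quad
 \dot{z}_3 = -(-\omega) = \omega \\[6pt]
% Scaling by k: robot receives (k v, \omega); controller sees z = (x/k, y/k, \theta)
&\dot{z}_1 = \tfrac{1}{k}\,k v\cos\theta = v\cos z_3, \quad
 \dot{z}_2 = \tfrac{1}{k}\,k v\sin\theta = v\sin z_3, \quad
 \dot{z}_3 = \omega
\end{aligned}
```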

Proof in Practice

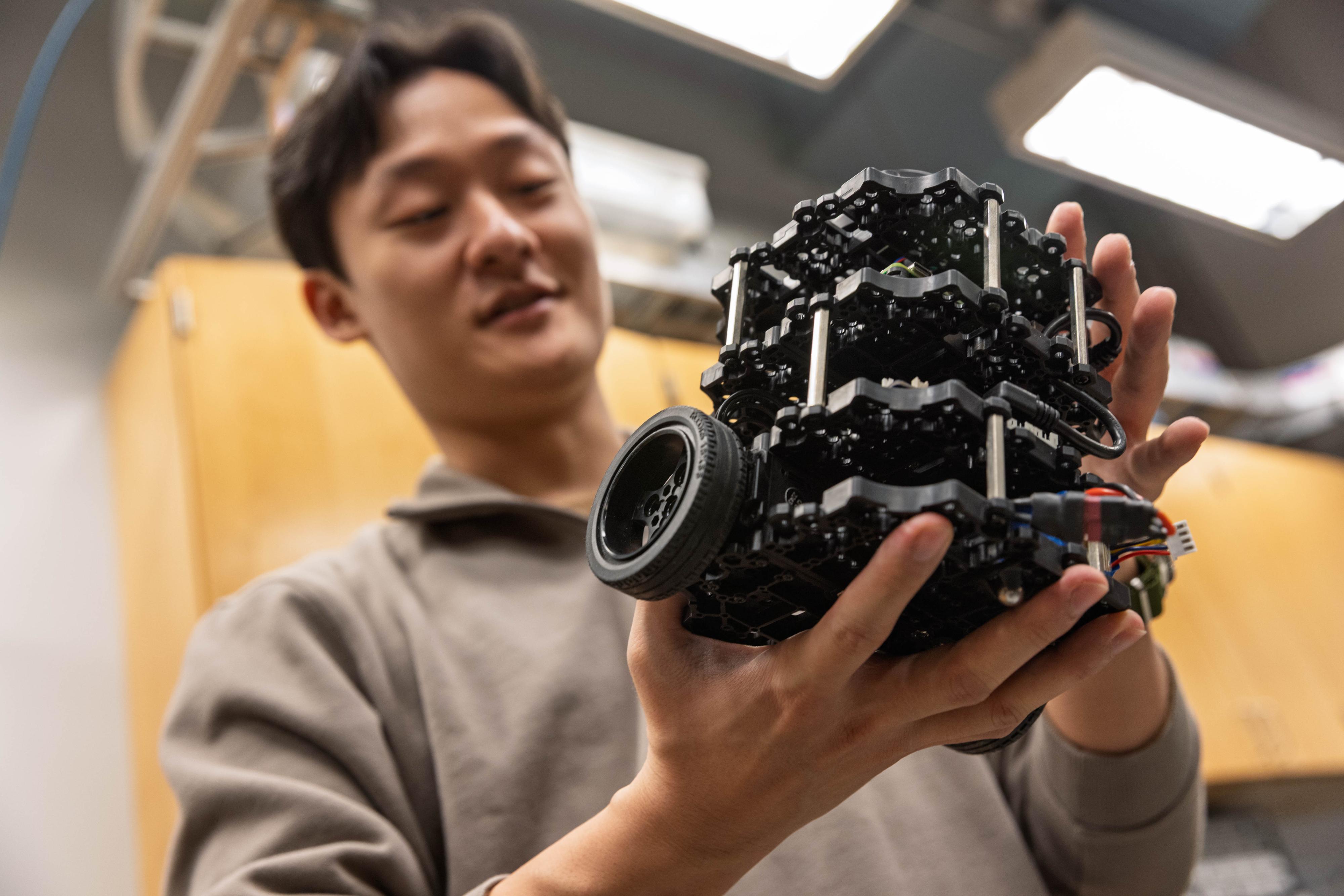

To validate their theory, the team tested attacks on a TurtleBot 3, a widely used mobile robot. The results showed that, when under attack, the robot followed a completely different path, yet the controller believed it was on track. Traditional detection methods failed because the compromised data matched expected patterns.

“The most surprising observation was how easily and elegantly attacks could be implemented with minimal knowledge requirements. The attacker only needed the structure of the dynamics, or Jacobian matrix, without requiring mechanical details like inertia, wheel size, or even controller parameters,” Ueda said. “Despite this limited knowledge, attacks can be successfully implemented to alter trajectories while maintaining perfect undetectability.”
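One way to see why so little knowledge is needed, still under the kinematic assumption, is to look at wheel commands. If an attacker swaps the left and right wheel commands of a differential-drive robot, the forward speed is unchanged and the turn rate flips sign. That is exactly the command half of the reflection attack, and it holds for any wheel radius and track width. The functions `body_twist`, `reflection_attack`, and `swap_reflects_for_any_robot` are hypothetical names for this sketch, not the paper's Jacobian-based attack construction. A complete attack would also need a matching reflection of the reported pose.

```python
import math
import random


def body_twist(w_left, w_right, wheel_radius, track_width):
    """Differential-drive kinematics: wheel speeds (rad/s) -> (v in m/s, omega in rad/s)."""
    v = wheel_radius * (w_right + w_left) / 2.0
    omega = wheel_radius * (w_right - w_left) / track_width
    return v, omega


def reflection_attack(w_left, w_right):
    """Hypothetical command rewrite: swap the wheel channels. Uses no robot parameters."""
    return w_right, w_left


def swap_reflects_for_any_robot(trials=1000, seed=0):
    """Over random, arbitrary robot geometries, check that swapping keeps v and flips omega."""
    rng = random.Random(seed)
    for _ in range(trials):
        r = rng.uniform(0.01, 0.2)   # wheel radius (m), arbitrary
        b = rng.uniform(0.1, 1.0)    # track width (m), arbitrary
        w_l, w_r = rng.uniform(-10.0, 10.0), rng.uniform(-10.0, 10.0)
        v, omega = body_twist(w_l, w_r, r, b)
        v_att, omega_att = body_twist(*reflection_attack(w_l, w_r), r, b)
        if not (math.isclose(v_att, v, abs_tol=1e-12)
                and math.isclose(omega_att, -omega, abs_tol=1e-12)):
            return False
    return True


if __name__ == "__main__":
    print(swap_reflects_for_any_robot())
```

The check never uses the wheel radius or track width to build the attack. Those parameters appear only when computing the robot's response, which mirrors the point that the attacker needs the structure of the model rather than its mechanical details.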

A Path to Defense

In their paper, Ueda and Kwon propose a novel countermeasure called the state monitoring signature function (SMSF). SMSF is a mathematical tool that generates a unique signature of the robot’s state, one that is difficult to spoof with simple transformations.

While SMSF adds a layer of protection, it’s not foolproof. Attackers could eventually learn the signature through observation, underscoring the need to update it frequently.

The Critical Need for Robot Cybersecurity

With robots becoming increasingly integral to critical operations, a successful undetectable attack could disrupt supply chains, damage property, or even endanger lives.

The study highlights an urgent need for robust security strategies in cyber-physical systems. As robots grow smarter, so must their defenses.

“The research community should be aware of the symmetry-based attack methodology and must move beyond traditional cybersecurity toward security-aware control architecture design,” Ueda said.

Read the full paper, “Perfectly Undetectable Reflection and Scaling False Data Injection Attacks via Affine Transformation on Mobile Robot Trajectory Tracking Control,” in IEEE Transactions on Robotics.